Navigating the digital landscape of a WordPress website can feel like exploring a complex maze. Among the myriad of tools and settings that influence your site’s visibility, the “robots.txt” file emerges as a silent yet powerful guardian, guiding search engines on how to crawl and index your content. Understanding how to effectively utilize the robots.txt in WordPress is crucial for enhancing your site’s SEO strategy and safeguarding precious resources.

In this listicle, we’ll delve into 10 essential tips that will empower you to maximize the potential of your robots.txt file. From optimizing search engine crawling to preventing the indexing of unwanted pages, each tip is designed to provide clarity and actionable insights. By the end of this article, you will not only grasp the significance of the robots.txt in WordPress but also gain practical knowledge that can significantly enhance your website’s performance and visibility in search results. Embrace the opportunity to refine your WordPress SEO strategies with these essential insights!

1) Understand the Importance of Robots.txt

Why Robots.txt Matters for Your WordPress Site

Robots.txt is a critical file for any WordPress site owner aiming for effective search engine optimization (SEO). This simple text file serves as a communication bridge between your website and search engine crawlers, informing them which pages or sections of your site they can access. Without this file, crawlers might waste their time on pages that offer little value, such as admin areas or duplicate content.

Control Over Crawler Behavior

By specifying rules in your robots.txt file, you can exert control over how search engines interact with your site. This can lead to a more efficient indexing process. Consider these advantages:

- Minimize Crawl Budget Waste: Search engines allocate a crawl budget to each website, which is the number of pages they will crawl in a given time frame. By disallowing low-value pages, you ensure that crawlers spend their efforts on your best content.

- Keep Crawlers Out of Low-Value Areas: Disallow scripts, login pages, or account sections so that compliant crawlers skip them. Keep in mind that robots.txt is publicly readable and is not a security control; protect genuinely sensitive data with authentication.

- Enhance SEO Strategy: A well-structured robots.txt file supports your SEO by steering crawlers toward the pages you want discovered and away from the ones that add nothing to search results.

Common Misconceptions

Many WordPress users misunderstand the robots.txt file’s purpose, thinking it only serves as a gatekeeper for search engines. It also helps reduce the crawling of duplicate URLs and points crawlers to your XML sitemap. Avoid these pitfalls:

- Assuming “Disallow” Means “No Index”: The Disallow directive in a robots.txt file instructs crawlers not to visit a page, but it does not prevent it from being indexed if there are external links pointing to it.

- Ignoring Crawl Permissions: Always check the robots.txt file after publishing new content. Adjust crawl permissions as necessary, especially for newly launched pages that you want to be indexed immediately.
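Because Disallow only controls crawling, keeping a page out of the index is usually done with a robots meta tag (or an X-Robots-Tag header) on a page that crawlers are still allowed to fetch. The following is a minimal sketch, using only Python's standard library, of how you might check whether a page's HTML carries a noindex directive. The class and function names are illustrative, not part of WordPress or any plugin.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from every <meta name="robots"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute names arrive lowercased; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for token in attributes.get("content", "").split(","):
                if token.strip():
                    self.directives.add(token.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

If a page is both disallowed in robots.txt and tagged noindex, crawlers that obey the Disallow rule never fetch the page and therefore never see the tag.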

Sample Robots.txt Structure for WordPress

| Directive | Example | Purpose |
|---|---|---|
| User-agent | User-agent: * | Applies the rules to all crawlers. |
| Disallow | Disallow: /wp-admin/ | Prevents crawlers from accessing admin pages. |
| Sitemap | Sitemap: https://yourwebsite.com/sitemap.xml | Provides the location of your XML sitemap. |

As you optimize your WordPress site with a well-crafted robots.txt file, remember that every directive should serve a purpose. Regularly review and update it based on your site’s evolving content and SEO strategy. This proactive measure will help you maintain a healthy and efficient search presence while maximizing your site’s potential in search engine results.
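As a quick sanity check, the three directives from the sample table can be assembled into a file and parsed with Python's standard urllib.robotparser module. This is a minimal sketch: yourwebsite.com is the article's placeholder domain, the two test URLs are hypothetical, and site_maps() requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Directives taken from the sample table; the domain is a placeholder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Sitemap: https://yourwebsite.com/sitemap.xml
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
# Hypothetical URLs used only to exercise the rules.
admin_allowed = rules.can_fetch("*", "https://yourwebsite.com/wp-admin/options.php")
post_allowed = rules.can_fetch("*", "https://yourwebsite.com/hello-world/")
sitemaps = rules.site_maps()
```

Here admin_allowed comes out False and post_allowed True, which matches the intent of the table: the admin area is off limits while regular content stays crawlable.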

2) How to Access and Edit Your Robots.txt File

Locating Your Robots.txt File

To access your robots.txt file in WordPress, start with its location. The file lives in the root directory of your website, so you can view it by typing yourwebsite.com/robots.txt into your browser’s address bar and reading the directives set for crawlers. If no physical file exists in the root directory, WordPress serves a virtual robots.txt generated on the fly. That gives you basic default rules, but there is nothing to edit over FTP until you create a real file.
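The same lookup can be scripted. The sketch below derives the robots.txt address from any page URL and downloads it with Python's standard library; the function names are illustrative and the timeout value is an arbitrary assumption.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # robots.txt always sits at the root of the scheme + host combination.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(page_url: str, timeout: float = 10.0) -> str:
    """Download the robots.txt that applies to page_url (needs network access)."""
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

For example, robots_url("https://yourwebsite.com/blog/post?x=1") resolves to the root-level file regardless of how deep the page sits in your site.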

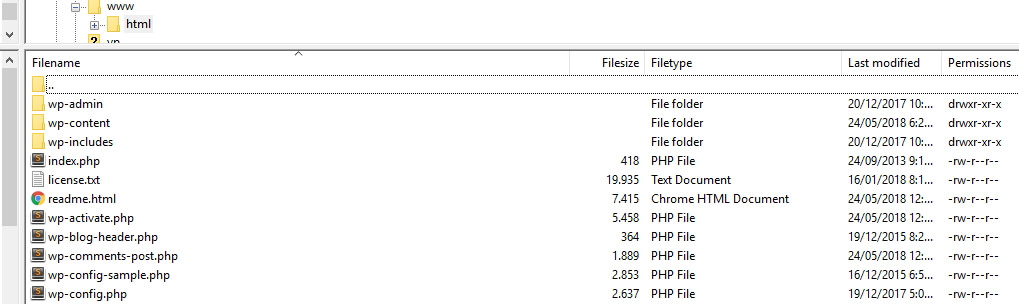

Accessing the File via FTP

For more advanced editing, accessing your robots.txt file through FTP is often recommended. Here’s how:

- Download and install an FTP client like FileZilla.

- Log in with your FTP credentials (usually provided by your hosting service).

- Navigate to the root directory of your WordPress installation.

- Look for the robots.txt file. If it’s not there, you can create a new file using a text editor.
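If you would rather script the upload than click through an FTP client, Python's standard ftplib module can do the same job. This is a sketch under assumptions: the web root "/public_html" is a common but hypothetical default, the host and credentials come from your hosting provider, and your host must support FTP over TLS for connect_and_upload to work.

```python
import io
from ftplib import FTP_TLS


def upload_robots(ftp, robots_text: str, web_root: str = "/public_html") -> None:
    """Write robots.txt into web_root using an already logged-in FTP session."""
    ftp.cwd(web_root)
    payload = io.BytesIO(robots_text.encode("utf-8"))
    ftp.storbinary("STOR robots.txt", payload)


def connect_and_upload(host: str, user: str, password: str, robots_text: str) -> None:
    """Open an FTP-over-TLS session and upload the file (needs network access)."""
    with FTP_TLS(host) as ftp:
        ftp.login(user, password)
        ftp.prot_p()  # encrypt the data channel as well as the login
        upload_robots(ftp, robots_text)
```

Splitting the upload step from the connection step keeps the file-writing logic easy to test without a live server.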

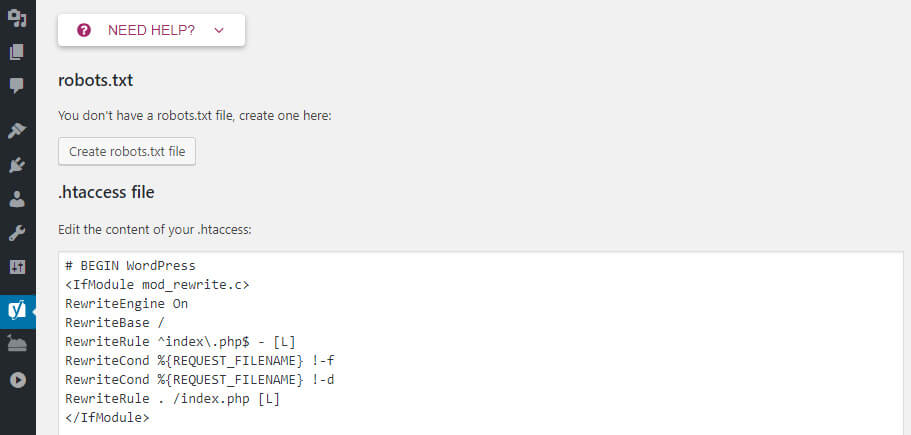

Editing Your Robots.txt File in WordPress Admin

If you prefer to edit directly within the WordPress dashboard without FTP, you can utilize a plugin. Several SEO plugins, like Yoast SEO or All in One SEO, allow you to manage your robots.txt file easily. Follow these steps:

- Install and activate one of the mentioned SEO plugins.

- Navigate to the plugin settings from your WordPress dashboard.

- Locate the robots.txt editor option within the settings panel.

- Make your desired changes to allow or disallow specific bots or pages.

Common Directives to Include

When editing your robots.txt file, there are several directives to consider:

| Directive | Description |
|---|---|
| User-agent: | Specifies which web crawler the following rules apply to. |
| Disallow: | Blocks specific pages or sections of your site from being crawled. |
| Allow: | Overrides a disallow rule to allow specific pages within a blocked section. |

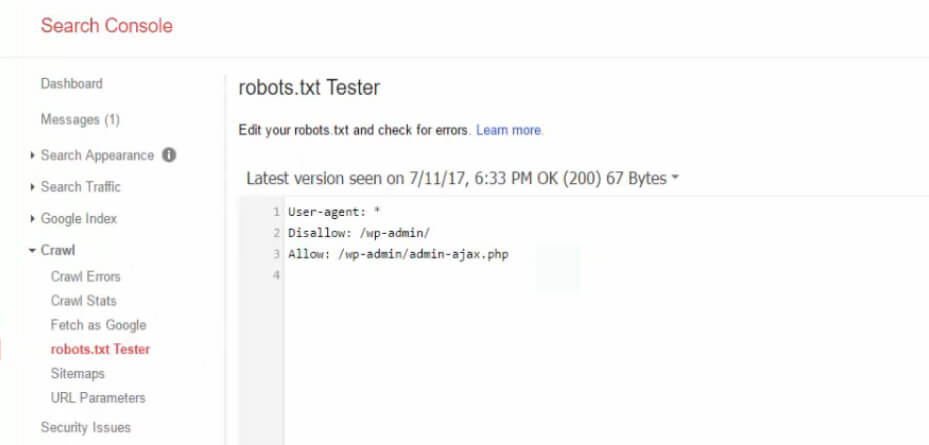

Testing Your Robots.txt File

After making edits, it’s crucial to test your robots.txt file to ensure that it’s functioning as intended. You can use tools like Google Search Console to verify that the Googlebot can access the pages you want it to. This feature allows you to check if the modifications made are correctly understood by search engines.

Conclusion

Editing your robots.txt file accurately is essential for optimizing your website’s SEO. Whether you choose to access your file through FTP or the WordPress dashboard, being intentional about what you allow or disallow can greatly influence your site’s visibility on search engines. Take your time to review and refine your directives to align with your overall SEO strategy.

3) Best Practices for Allowing and Disallowing URLs

Understand Your Website Structure

Before you dive into the specifics of allowing and disallowing URLs in your robots.txt in WordPress, it’s essential to have a clear understanding of your website’s structure. Knowing how your site is organized helps in making informed decisions about what to keep open for search engines and what to restrict access to. Consider the following:

- Hierarchy: Map out how your content is arranged.

- Importance: Determine which pages hold strategic SEO value.

- Duplicate Content: Identify any pages that could present duplication issues.

Utilize Wildcards and Directives

When formatting your robots.txt in WordPress, wildcards can be a game changer. Utilizing characters such as * and $ allows you to create more efficient rules. Here’s how to use them:

- Wildcard (*): Use it to refer to multiple pages or content. For instance, Disallow: /category/* disallows all categories.

- End Character ($): Specify URLs that end a particular way. For example, Disallow: /*.pdf$ restricts access to all PDF files.
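To see how the two special characters behave, it can help to translate a robots.txt pattern into a regular expression. The sketch below is a small hand-written matcher, not the algorithm of any particular search engine: it treats * as "any sequence of characters" and a trailing $ as "the URL must end here". The function names and sample paths are illustrative.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Convert a robots.txt path pattern into an anchored regular expression."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    # Escape literal text and turn each * into "match anything".
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile("^" + regex + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """Return True if the URL path is covered by the pattern."""
    return pattern_to_regex(pattern).match(path) is not None
```

With this matcher, /*.pdf$ covers /files/guide.pdf but not /files/guide.pdf?download=1, because the $ requires the URL to end in .pdf. Dropping the $ would cover both.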

Be Mindful of Search Engine Bots

Understanding the behavior of different search engine bots is crucial in creating your robots.txt in WordPress. Some bots respect your directives, while others may not. Ensure that your settings don’t inadvertently block essential bots, particularly the major ones like Googlebot and Bingbot.

Consider maintaining a list of user agents you want to allow or disallow. You can specify your rules like this:

| User Agent | Directive |
|---|---|
| Googlebot | Disallow: /private-directory/ |
| Bingbot | Allow: / |
| Slurp | Disallow: /temp/ |
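In an actual file, each row of the table becomes its own group that starts with a User-agent line. The sketch below writes those three groups out and checks them with Python's standard urllib.robotparser; the test paths are hypothetical, and DuckDuckBot is included only as an example of a crawler with no group of its own.

```python
from urllib.robotparser import RobotFileParser

# One group per crawler, separated by blank lines.
PER_AGENT_RULES = """\
User-agent: Googlebot
Disallow: /private-directory/

User-agent: Bingbot
Allow: /

User-agent: Slurp
Disallow: /temp/
"""

parser = RobotFileParser()
parser.parse(PER_AGENT_RULES.splitlines())

googlebot_private = parser.can_fetch("Googlebot", "/private-directory/report.html")
bingbot_private = parser.can_fetch("Bingbot", "/private-directory/report.html")
slurp_temp = parser.can_fetch("Slurp", "/temp/draft.html")
# A crawler without a matching group (and no * group) is not restricted.
other_private = parser.can_fetch("DuckDuckBot", "/private-directory/report.html")
```

Note that a rule written for one crawler says nothing about the others: only Googlebot is kept out of /private-directory/ here, so add a User-agent: * group if you want a default for everyone else.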

Avoid Over-Blocking

While it may seem advantageous to block as much content as possible, over-blocking can lead to negative SEO impacts. Too many disallowed URLs may prevent search engines from indexing vital pages. A strategy of selective blocking allows you to keep control without sacrificing visibility. Focus on:

- Pages with Low Value: Disallow content that doesn’t contribute to your site’s goals.

- Staging Sites: If you have a staging environment, ensure those URLs are blocked to prevent duplicate indexing.

Test Before You Implement

Before finalizing your robots.txt in WordPress, always test your directives. Use a tool such as the robots.txt report in Google Search Console, which replaced Google’s older standalone robots.txt Tester, to verify that your rules are being interpreted correctly. Be particularly cautious with complex rules, as a small mistake can have an outsized impact on your site’s SEO. Testing ensures that your allowed and disallowed URLs accurately reflect your intentions and don’t block important content.

4) Utilizing Robots.txt for SEO Optimization

When it comes to optimizing your WordPress site for search engines, utilizing robots.txt can significantly enhance your SEO efforts. This straightforward text file acts as a directive that guides search engine crawlers on how to interact with your site. Understanding how to properly configure it is essential for anyone serious about driving organic traffic.

Understanding the Basics of Robots.txt

The robots.txt file is located in the root directory of your website and serves two primary purposes:

- Directing search engines about which pages to crawl or not to crawl.

- Preventing duplicate content issues by blocking access to certain sections of your site.

Common Syntax and Its Importance

To leverage this tool effectively, familiarity with its syntax is crucial. A simple example of a robots.txt file may look like this:

    User-agent: *
    Disallow: /wp-admin/
    Allow: /wp-admin/admin-ajax.php

In this example, all web crawlers are instructed not to access the WordPress admin area but are allowed to request the AJAX file, which some themes and plugins rely on for front-end functionality. This keeps compliant crawlers from spending requests on your site’s backend. It does not secure the admin area, though: robots.txt is advisory, and access control is still the job of the WordPress login.
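Why does the Allow line win even though it comes after the Disallow line? Under the longest-match precedence described in RFC 9309 and in Google's documentation, the rule with the longest matching path takes priority, and a tie goes to Allow. The sketch below is a small hand-written illustration of that idea using plain prefix matching; it is not a full parser, and, as far as I know, Python's own urllib.robotparser applies rules in file order instead.

```python
def is_allowed(rules: list[tuple[bool, str]], path: str) -> bool:
    """Apply longest-match precedence to (allow, path_prefix) rules."""
    best_length = -1
    allowed = True  # no matching rule means the path may be crawled
    for allow, prefix in rules:
        if not path.startswith(prefix):
            continue
        longer = len(prefix) > best_length
        tie_for_allow = len(prefix) == best_length and allow
        if longer or tie_for_allow:
            best_length, allowed = len(prefix), allow
    return allowed


# The rules from the example: (False, ...) is Disallow, (True, ...) is Allow.
WORDPRESS_RULES = [
    (False, "/wp-admin/"),
    (True, "/wp-admin/admin-ajax.php"),
]
```

Calling is_allowed(WORDPRESS_RULES, "/wp-admin/admin-ajax.php") returns True because the Allow path is longer and therefore more specific, while any other path under /wp-admin/ stays blocked.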

Best Practices for Using Robots.txt in WordPress

When optimizing your WordPress site using robots.txt, consider the following best practices:

- Prioritize important pages: Ensure that your core content is easily accessible to crawlers.

- Block non-essential elements: Pages that might dilute your SEO score, like duplicate content or admin sections, should be disallowed.

- Update regularly: As your site evolves, your robots.txt should be updated to reflect new changes or content additions.

Common Mistakes to Avoid

While utilizing robots.txt for SEO optimization is valuable, some common mistakes can sabotage your efforts:

- Blocking important assets like CSS or JavaScript, which are crucial for page rendering.

- Overgeneralizing the rules, making it difficult for search engines to index your content correctly.

- Neglecting to test your robots.txt file, for example with the robots.txt report in Google Search Console.

How Robots.txt Impacts Your WordPress SEO Strategy

Adopting the right strategy with robots.txt can set the stage for improved indexing and ranking. Here’s a brief overview:

| Strategy | Impact on SEO |
|---|---|
| Prioritize core content | Enhances visibility and user engagement. |
| Block unwanted crawlers | Focuses crawl budget on valuable content. |
| Regular updates | Adapts to site changes, maintaining SEO strength. |

In essence, utilizing your robots.txt file not only prevents unwanted indexing but also plays a pivotal role in shaping your WordPress SEO journey. A well-structured approach will translate to better performance in search rankings, thus amplifying your site’s organic reach and user engagement.

5) Common Mistakes to Avoid in Your Robots.txt File

Overlooking Duplicated Content

When curating your robots.txt file, one of the prevalent blunders is neglecting to address duplicated content. Search engines might find multiple pages on your site that offer similar information, leading to confusion about which page to rank. To circumvent this, use the robots.txt file to disallow specific sections that harbor duplicate material or utilize canonical tags on the pages instead.

Misconfigured Directives

Another common pitfall is misconfiguring or misunderstanding the directives within the robots.txt file. Some typical directives include:

- User-agent: Specifies which crawlers the following rules apply to.

- Disallow: Tells the crawler which pages or directories not to access.

- Allow: Specifically allows certain pages within a disallowed directory.

Be meticulous about correctly using these directives, as a slight error can mean granting access to sensitive areas or blocking important content unwittingly.

Ignoring Wildcards

The absence of wildcards (*) in your configuration can lead to inefficiencies. Wildcards allow for broader rules that can save time and space in your robots.txt file. For example, if you want to keep all URLs that end in .pdf disallowed, using a wildcard simplifies the entry:

    User-agent: *
    Disallow: /*.pdf$

This effectively blocks access to all PDF files across your site without needing to specify each URL individually.

Failing to Update Regularly

A static robots.txt file can become outdated, especially after significant site changes like restructuring or redesigns. Ensure that you revisit and revise your robots.txt file regularly to reflect current content strategy and SEO practices. An outdated file may inadvertently block search engines from indexing new or crucial pages.

Not Testing Your Configuration

Lastly, failing to test your setup is a common mistake. Using tools such as Google Search Console, review whether your rules are functioning correctly. The testing tool can provide feedback on how Googlebot interprets your directives, highlighting areas where confusion may arise or where access is inadvertently granted. Committing to regular checks can significantly improve your site’s crawling efficiency and overall SEO performance.

By avoiding these critical errors in your robots.txt file, you can optimize your WordPress site’s visibility and indexing, ultimately leading to improved organic traffic and user engagement.

6) Testing Your Robots.txt File for Errors

Understanding the Importance of a Robots.txt File

When managing your WordPress site, ensuring that search engines properly crawl your content is paramount. The robots.txt file plays a vital role by instructing search engine bots on which pages to crawl and which to ignore. However, even small errors in this file can lead to significant problems, such as valuable pages being blocked from search engine indexing. Thus, testing your robots.txt file for errors is an essential step in optimizing your WordPress website.

How to Check for Errors in Your Robots.txt File

To effectively check for issues in your robots.txt file, follow these straightforward steps:

- Access Your Robots.txt File: You can view your current robots.txt file by navigating to www.yoursite.com/robots.txt. This allows you to see the directives currently in place.

- Leverage Online Tools: Utilize testing tools such as the robots.txt report in Google Search Console or other free web-based services that can analyze the syntax and structure of your robots.txt file.

- Check Crawl Errors: Search Console is an invaluable resource. It can highlight specific pages that were not crawled due to directives in your robots.txt file. Analyzer tools provide detailed reports to highlight errors.

Common Errors to Look For

As you perform your tests, be aware of the following common mistakes that could compromise your site’s SEO performance:

| Common Errors | Impact |
|---|---|
| Incorrect syntax (e.g., typos, wrong formatting) | Can prevent search engines from respecting your directives, leading to undesired pages being crawled. |
| Blocking essential pages | Impairs indexability of key pages, hindering overall SEO efforts. |
| Using disallow directives improperly | Can unintentionally block the entire site from being crawled if misconfigured. |
| Excessive wildcards | Might match more URLs than intended, causing important content to be skipped. |
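Several of the errors in the table can be caught mechanically before a crawler ever sees the file. The following is a rough lint sketch, not a replacement for a search engine's own validator. The set of known directives is an assumption (Crawl-delay, for instance, is honored by some crawlers and ignored by others), and the messages are illustrative.

```python
# Directives this sketch recognizes; adjust the set to the crawlers you care about.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots(text: str) -> list[str]:
    """Return a list of human-readable problems found in robots.txt content."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        name = field.lower()
        if name not in KNOWN_DIRECTIVES:
            problems.append(f"line {number}: unknown directive '{field}'")
        elif name == "user-agent":
            seen_user_agent = True
        elif name in {"allow", "disallow"}:
            if not seen_user_agent:
                problems.append(f"line {number}: rule appears before any User-agent line")
            if name == "disallow" and value == "/":
                problems.append(f"line {number}: 'Disallow: /' blocks the whole site for this group")
    return problems
```

A file with a misspelled directive, a site-wide Disallow, and a rule missing its colon would produce three findings, one per line, while a clean file returns an empty list.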

Best Practices For Fixing Errors

If your tests reveal errors in your robots.txt file, take corrective action by employing these best practices:

- Review Directive Logic: Ensure your disallow rules are logical and cater only to non-essential pages.

- Use Comments Wisely: To clarify complex rules, add comments in your robots.txt file. This can help prevent misinterpretation in the future.

- Validate Changes: After making amendments, re-test your robots.txt file using your preferred analysis tool to confirm issues have been resolved.

By methodically testing your robots.txt file for errors, you enhance your WordPress site’s SEO performance, ensuring that it grows and thrives in the competitive digital landscape. Remember, a well-structured robots.txt file acts as your site’s gatekeeper—ensuring optimum visibility and attracting organic traffic effectively.

7) How Robots.txt Affects Crawling and Indexing

The robots.txt file plays a critical role in determining how web crawlers interact with your site, particularly in the context of robots.txt in WordPress. When you configure this file correctly, it helps search engines crawl your content efficiently while keeping them from wasting resources on pages that are not relevant for indexing or that may introduce duplicate content issues.

Understanding Crawling Behavior

When a search engine sends a bot to crawl your site, it checks your robots.txt file to understand which areas of your site it can visit. This file can include directives that:

- Allow: Specify which URLs can be crawled.

- Disallow: Direct bots away from specific sections of the site.

- Sitemap: Point to your XML sitemap for efficient navigation and indexing.

This type of control helps search engines focus on your key content, ensuring that they prioritize the most important pages, thus aiding in crawling and indexing.

The Impact of Incorrect Configuration

A misconfigured robots.txt file can lead to significant issues in indexing:

- Pages you intend to be discoverable may be inadvertently blocked.

- Search engines might miss essential metadata, hindering your SEO efforts.

- Overly restrictive rules could cause your site to fail to appear in search results altogether.

To illustrate the consequences, consider the implications of disallowing certain folders. Excluding /wp-admin/ is common practice, but blocking the directory that holds your main content could lead to a dramatic drop in visibility.

Utilizing Tools for Verification

WordPress users can take advantage of various tools to verify their robots.txt directives. Google Search Console, for instance, provides a robots.txt report that shows how Google fetched and parsed your file. This essential step can help avoid the pitfalls of improper configuration:

| Tool | Functionality |
|---|---|
| Google Search Console | Test and analyze crawling behavior. |
| SEMrush | Evaluate your website’s overall crawlability. |
| Ahrefs | Check for blocked pages and URLs. |

Best Practices for WordPress Users

To enhance your robots.txt in WordPress strategy, consider the following best practices:

- Regular Updates: Review and update your robots.txt file as your site evolves.

- Limit Disallow Directives: Use them sparingly to avoid blocking useful content.

- Focus on Important Pages: Make sure your essential pages and posts are accessible to web crawlers.

- Test Before Going Live: Always ensure that your file is working as expected before your changes go into effect.
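One way to test before going live is to keep a short list of URLs that must always stay crawlable and check every draft of the file against it. The sketch below uses Python's standard urllib.robotparser. The function name, the default crawler name, and the sample URLs are assumptions, and to my knowledge this parser only does plain prefix matching, so rules that rely on * or $ need a different checker.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_text: str, must_crawl: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs from must_crawl that the draft robots.txt would block."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return [url for url in must_crawl if not parser.can_fetch(agent, url)]


# A draft that accidentally blocks the blog along with the admin area.
DRAFT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /blog/
"""

# Hypothetical pages that should never be blocked.
MUST_CRAWL = [
    "https://yourwebsite.com/",
    "https://yourwebsite.com/blog/hello-world/",
]

problems = blocked_urls(DRAFT, MUST_CRAWL)
```

An empty result means the draft leaves all of your essential pages reachable; anything else is a list of pages to fix before you publish the file.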

By implementing these strategies, you can maintain a healthy balance between protecting sensitive data and maximizing your site’s visibility in search engine results, which is essential for building organic traffic through robots.txt management.

8) Managing Multiple Websites with One Robots.txt

Streamlining Your SEO Strategy

Managing multiple websites can be a daunting task, especially when it comes to ensuring that search engines correctly crawl your content. One caveat comes first: crawlers request robots.txt separately from each host, and the rules in a file apply only to the host that serves it, so a single file cannot literally govern several domains. What you can centralize is the source. By maintaining one shared set of directives and serving it at the root of every site, you keep control in one place and apply a consistent SEO strategy across your portfolio.

Benefits of a Unified Robots.txt

Utilizing one robots.txt for multiple sites comes with several key advantages:

- Simplified Management: A single point of control means less time spent updating files across various sites.

- Consistency in Directives: Avoids discrepancies between settings, ensuring all sites reflect the same SEO intentions.

- Ease of Updates: If you need to make changes, doing it in one file saves both time and effort.

- Clear Structure: Allows for a more organized approach to crawling directives across different domains.

Points to Consider

While a single robots.txt file can offer efficiency, there are some caveats to keep in mind:

- Domain Specifications: Crawlers read robots.txt per host, so the shared rules must be served at the root of every domain and subdomain, and each directive must make sense for every site that receives it.

- Potential for Errors: A mistake in a single file could adversely affect multiple sites, amplifying the impact of any erroneous code.

- Access Restrictions: Verify that sensitive areas are adequately protected for each website to avoid unintentional exposure.

Best Practices for Combining Robots.txt Files

To effectively manage multiple websites with one robots.txt file, adhere to these best practices:

| Best Practice | Description |
|---|---|
| Clear Organization | Use comments to mark which rules are shared and which exist for a particular site, since the file itself cannot scope rules by domain. |
| Thorough Testing | Test the file served by each site, for example with the robots.txt report in Google Search Console, before relying on it. |
| Regular Audits | Periodically review your directives to ensure they’re still aligned with your SEO goals. |
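Because crawlers request /robots.txt separately from each host, one practical reading of a "single file" is a single shared template that is rendered once per site. The sketch below illustrates that approach; the shared rules, the example domains, the /cart/ path, and the assumption that every site exposes its sitemap at /sitemap.xml are all hypothetical.

```python
# Rules every site in the portfolio shares.
SHARED_RULES = [
    "User-agent: *",
    "Disallow: /wp-admin/",
    "Allow: /wp-admin/admin-ajax.php",
]


def render_for_site(base_url: str, extra_rules: tuple[str, ...] = ()) -> str:
    """Build the robots.txt content for one site from the shared template."""
    lines = [
        *SHARED_RULES,
        *extra_rules,  # site-specific additions join the shared * group
        "",
        f"Sitemap: {base_url.rstrip('/')}/sitemap.xml",
    ]
    return "\n".join(lines) + "\n"


blog_file = render_for_site("https://blog.example.com")
shop_file = render_for_site("https://shop.example.com/", ("Disallow: /cart/",))
```

Each rendered file is then deployed to the root of its own site, so you edit the rules in one place while every host still serves a file that crawlers will actually read.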

By incorporating these techniques, you can maximize the effectiveness of your combined robots.txt strategy. Your approach not only reflects a thorough understanding of the robots.txt functionality but also positions your various websites to achieve optimal indexing, leading to an increase in organic traffic across your online portfolio.

9) Integrating Robots.txt with Other SEO Tools

Utilizing a Comprehensive SEO Ecosystem

Integrating your robots.txt file with other SEO tools can create a powerful SEO ecosystem that maximizes your WordPress site’s performance. By synchronizing settings and data across these tools, you can ensure that your instructions for search engine crawlers align with your overall optimization strategy.

- Google Search Console: Start by linking your WordPress site to Google Search Console. This tool provides insights into how Google indexes your site. You can test and validate your robots.txt file, ensuring that there are no restrictions that could hinder your site’s visibility.

- SEO Plugins: Plugins like Yoast SEO or All in One SEO Pack allow you to manage your robots.txt directly from your WordPress dashboard. Modify settings without diving into code. These plugins often suggest optimized configurations to enhance your site’s crawlability.

Analyzing Crawl Errors and Optimization

Once you have integrated your robots.txt with these tools, take advantage of their features to analyze and rectify crawl errors. Tools like Screaming Frog and SEMrush can help you audit your website, providing information on blocked URLs, which aids in optimizing your robots.txt instructions.

A structured audit can look something like this:

| Error Type | Impact | Recommended Action |
|---|---|---|
| Blocked Pages | Low Search Visibility | Update robots.txt to allow crawling |
| Disallowed Directories | Loss of Valuable Content | Reassess which directories to block |
| Redirect Chains | Slow Crawl Rate | Eliminate unnecessary redirects |

Enhancing Local SEO with Geo-Targeting Tools

For local businesses, integrating local SEO tools can further enhance website visibility. If your robots.txt file inadvertently blocks essential local pages, you could miss out on valuable traffic. Use tools like Moz Local or BrightLocal to ensure your geo-targeting efforts align effectively with your robots.txt settings.

Be mindful that blocking directories containing local landing pages can severely impact your reach in local search results. Tools that provide insights into local rankings can also guide you in making informed decisions regarding the adjustment of your robots.txt entries.

Utilizing Analytics for Continuous Improvement

Analyze user behavior through Google Analytics to gain insights into how visitors interact with your site. Pay attention to traffic flow and identify any abrupt drops that may correlate with changes to your robots.txt file. If certain sections see reduced traffic, it may be time to reassess your file configurations.
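Correlating a traffic drop with a robots.txt change is much easier if you record exactly what changed and when. The sketch below uses Python's standard difflib module to produce a unified diff between two versions of the file; the function name and file labels are illustrative, and where you store the resulting log is up to you.

```python
import difflib


def robots_diff(old_text: str, new_text: str) -> list[str]:
    """Return unified-diff lines describing how robots.txt changed."""
    return list(
        difflib.unified_diff(
            old_text.splitlines(),
            new_text.splitlines(),
            fromfile="robots.txt (before)",
            tofile="robots.txt (after)",
            lineterm="",
        )
    )
```

Lines starting with + are rules that were added and lines starting with - are rules that were removed, so a newly added Disallow that lines up with the date of a traffic drop is easy to spot.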

By regularly cross-referencing data from your various SEO tools, you can create a responsive and adaptive strategy that leads to improved visibility and performance for your site. Each element, from robots.txt setups to analytics tracking, can be meticulously aligned to build a seamless SEO interface that works in your favor.

10) Keeping Your Robots.txt Updated After Website Changes

Why Regular Updates Matter

Maintaining an accurate robots.txt file is crucial for search engine optimization in WordPress. Every time you make significant changes to your website—be it new plugins, redesigns, or content strategy revisions—your robots.txt needs to reflect those shifts. Neglecting this can lead to unintended consequences, such as search engines crawling areas of your site that should remain hidden.

Common Situations Requiring Updates

Here are common scenarios where your robots.txt file may need adjustments:

- New Plugins: If you install SEO or caching plugins, they could introduce changes that affect how your content is indexed.

- Site Redesign: Major layout changes may require you to hide certain paths or folders that were previously public.

- Content Strategy Shifts: If you decide to focus on particular topics or products, ensuring your robots.txt aligns with this new focus is vital.

- Additional Languages: For multilingual sites, adjustments may be necessary to ensure proper indexing for each language version.

How to Update Effectively

Updating your robots.txt file in WordPress can be straightforward. Follow these best practices to ensure smooth updates:

- Backup Your Current File: Before making any changes, always create a backup of your existing robots.txt. This allows you to restore previous settings if needed.

- Use an SEO Plugin: Many WordPress SEO plugins, like Yoast SEO or All in One SEO, come with built-in tools to manage your robots.txt easily.

- Test After Updates: Utilize the Google Search Console to test your robots.txt file. This helps in identifying issues like disallowed URLs that should be crawlable.
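The backup step is easy to automate if you edit the file on disk. The following is a minimal sketch, assuming you have file-system access to the site's root directory; the function name and the timestamped .bak naming scheme are illustrative choices.

```python
import shutil
from datetime import datetime
from pathlib import Path
from typing import Optional


def update_with_backup(robots_path: Path, new_text: str) -> Optional[Path]:
    """Write new robots.txt content, keeping a timestamped copy of the old file.

    Returns the backup path, or None if there was no existing file to back up.
    """
    backup = None
    if robots_path.exists():
        stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
        backup = robots_path.with_name(f"{robots_path.name}.{stamp}.bak")
        shutil.copy2(robots_path, backup)  # preserves timestamps of the original
    robots_path.write_text(new_text, encoding="utf-8")
    return backup
```

If a change turns out to block pages it should not, restoring is a matter of copying the most recent .bak file back over robots.txt.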

Actual Examples of Effective Robots.txt Files

A well-structured robots.txt can serve as a model. Here are some templates based on typical WordPress setups:

| Site Type | Example Robots.txt |
|---|---|
| Standard Blog | User-agent: * |
| eCommerce Store | User-agent: * |
| Membership Site | User-agent: * |

These examples illustrate how specific paths can be blocked to optimize indexing and improve the site’s SEO performance. Each time you implement changes, revisit your robots.txt file and adjust accordingly to maintain clarity and effectiveness.

What is a robots.txt file and why is it important for my WordPress site?

The robots.txt file is a simple text file placed on your web server that instructs search engine crawlers about which pages or sections of your site they can or cannot access. It’s important because it helps manage your site’s visibility on search engines, ensuring that only the most important pages are indexed. For more insights, check out Wikipedia.

How do I create or edit a robots.txt file in WordPress?

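To create or edit a robots.txt file from the WordPress dashboard, you need an SEO plugin that includes a file editor. With such a plugin installed, the steps typically look like this (exact menu names vary by plugin and version):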

- Go to your WordPress dashboard.

- Navigate to SEO > Tools.

- Select Edit robots.txt.

- Make your changes and save the file.

Alternatively, you can use an FTP client to access the root directory of your website and create or modify the robots.txt file directly.

What should I include in my robots.txt file?

Your robots.txt file can contain various directives, such as:

- User-agent: Specify which search engine bots the directives apply to.

- Disallow: Prevent specific pages or directories from being crawled.

- Allow: Grant permission for certain pages within disallowed directories.

- Sitemap: Include a link to your XML sitemap for better indexing.

Make sure this information is tailored to your site’s needs and priorities.

Can I use a plugin to manage my robots.txt file?

Yes, several WordPress SEO plugins like Yoast SEO and All in One SEO Pack allow you to easily manage your robots.txt file without any coding. These plugins provide user-friendly interfaces to make edits, which can be especially helpful for less technical users.

How can I test my robots.txt file after editing?

To test your robots.txt file, you can use the robots.txt report in Google Search Console, which replaced Google’s earlier robots.txt Tester. It shows how Google fetched and parsed your file, so you can confirm that your intended pages are open for crawling. For guidance, see Google Support.

What common mistakes should I avoid in my robots.txt file?

Common mistakes include:

- Accidentally blocking important pages (like your homepage).

- Using incorrect syntax.

- Failing to account for trailing slashes.

- Not updating the file as your site evolves.

Double-check your directives to prevent potential search visibility issues.

How do search engines interpret the directives in my robots.txt file?

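Compliant search engines fetch robots.txt before crawling and use its directives to decide which URLs to request. When several rules match the same URL, Google documents that the most specific rule (the one with the longest matching path) wins and that a tie between Allow and Disallow goes to Allow; other crawlers may resolve conflicts differently. For a deeper understanding, refer to Moz.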

What is the difference between robots.txt and meta tags?

While robots.txt manages the crawling behavior of search engines on a broader scale, meta tags apply to individual pages. Meta tags offer a way to control indexing and archiving on a page-specific level. Using both in conjunction enables more granular control over SEO.

How does robots.txt affect my website’s SEO performance?

A well-structured robots.txt can enhance your SEO performance by allowing search engines to focus on indexing valuable content instead of irrelevant pages, potentially improving your site’s ranking. Conversely, misconfigured files can hinder visibility, so regular audits are recommended.

What are the limitations of using robots.txt?

While robots.txt is a useful tool, it comes with limitations:

- It cannot enforce compliance—some bots may ignore the rules.

- It does not provide security, so sensitive information should not rely on robots.txt for protection. The file is public, and listing a path in it reveals that the path exists.

- Disallowed pages can still be discovered through external links.

For ultimate protection of sensitive data, use password protection or HTTP authentication instead.

Outro: Wrapping Up Your Robots.txt Mastery in WordPress

Congratulations! You’ve journeyed through the essential tips for mastering the robots.txt file in your WordPress site. By now, you’re equipped with the knowledge to optimize your site’s crawlability, enhance your SEO efforts, and ultimately improve your online visibility. Remember, the robots.txt file is more than just a technical necessity; it’s a powerful tool in your digital arsenal that can guide search engine bots and control what they access on your website.

As you implement these tips, keep monitoring your site’s performance and adjusting accordingly. Regularly checking your robots.txt file and analyzing its impact on your SEO strategy can help you stay ahead of the competition.

For further reading and a deeper understanding of robots.txt nuances, consider exploring Yoast’s comprehensive guide on the subject. There’s always room for growth in the world of SEO, and every small adjustment can lead to significant gains.

So, is your robots.txt ready to take your WordPress site to new heights? Embrace the power of efficient crawling, and watch your online presence thrive! Happy optimizing!